Unconscious inference in motion perception

People show systematic biases in the perceived speed and direction of motion when objects are hard to see, for example under low-contrast conditions like driving in fog or rain. Under these conditions, objects appear to move more slowly than they actually do. This phenomenon has most commonly been explained as an example of unconscious perceptual inference: the brain constantly builds a model of object speeds in the world that combines current speed information coming in from the eyes (weighted by the uncertainty in that measurement) with expectations about speeds (based on previous experience of how often different speeds are encountered in the world).
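
The combination of measurement and expectation described above can be sketched in a few lines. This is a minimal illustration, not the model fitted in the reports below: it assumes a Gaussian likelihood, a zero-mean Gaussian prior (a common stand-in for "slow speeds are more frequent"), and that lower contrast simply means a larger `sigma_likelihood`. The function name and all numeric values are hypothetical.

```python
def posterior_mean_speed(measured_speed, sigma_likelihood, sigma_prior):
    """Posterior mean for a Gaussian likelihood and a zero-mean Gaussian prior.

    The estimate is the measurement shrunk toward zero; the noisier the
    measurement (larger sigma_likelihood), the stronger the shrinkage.
    """
    weight = sigma_prior**2 / (sigma_prior**2 + sigma_likelihood**2)
    return weight * measured_speed


# Same measured speed (arbitrary units), two assumed noise levels.
high_contrast = posterior_mean_speed(10.0, sigma_likelihood=1.0, sigma_prior=4.0)
low_contrast = posterior_mean_speed(10.0, sigma_likelihood=4.0, sigma_prior=4.0)

print(f"high contrast estimate: {high_contrast:.2f}")
print(f"low contrast estimate:  {low_contrast:.2f}")
```

Under these assumptions the low-contrast estimate is pulled further toward zero than the high-contrast one, which is the qualitative slow-speed bias described in the text.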

Another major component of these perceptual speed biases lies in the transformation of retinal speeds into world speeds. An ideal observer should perform speed inference in world-centered coordinates, which requires transforming the uncertainty in the retinal measurements into uncertainty about world speeds. How much this uncertainty is scaled depends on distance and retinal eccentricity, so an ideal observer's contrast-dependent speed biases should increase with viewing distance.
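
A rough sketch of why the ideal-observer prediction depends on distance follows. It assumes a simplified geometry (motion within a frontoparallel plane at depth `depth_m`, so a retinal angular speed ω at eccentricity θ corresponds to a world speed of depth · ω / cos²θ), a Gaussian likelihood, and a zero-mean Gaussian prior over world speed. The function names and numbers are illustrative assumptions, not the model from the reports below.

```python
import math


def retinal_to_world_scale(depth_m, eccentricity_deg):
    """Factor converting angular speed (rad/s) to world speed (m/s).

    Assumes motion in a frontoparallel plane at the given depth:
    x = Z * tan(theta)  =>  dx/dt = Z * (dtheta/dt) / cos(theta)**2.
    """
    theta = math.radians(eccentricity_deg)
    return depth_m / math.cos(theta) ** 2


def world_speed_weight(depth_m, eccentricity_deg, sigma_retinal, sigma_prior_world):
    """Weight on the measurement after scaling retinal noise into world units."""
    sigma_world = retinal_to_world_scale(depth_m, eccentricity_deg) * sigma_retinal
    return sigma_prior_world**2 / (sigma_prior_world**2 + sigma_world**2)


# Same retinal noise (rad/s) and same world-speed prior (m/s) at two distances.
near_weight = world_speed_weight(0.5, 0.0, sigma_retinal=0.1, sigma_prior_world=0.2)
far_weight = world_speed_weight(2.0, 0.0, sigma_retinal=0.1, sigma_prior_world=0.2)

print(f"weight on measurement at 0.5 m: {near_weight:.3f}")
print(f"weight on measurement at 2.0 m: {far_weight:.3f}")
```

Because the same retinal noise maps onto a larger world-speed uncertainty at the farther distance, the measurement receives less weight there and the estimate is drawn more strongly toward the prior.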

Published reports

- Manning TS, Pillow JW, Rokers B, Cooper EA. (2022) Humans make non-ideal inferences about world motion. Journal of Vision 22 (14), 4054. doi: 10.1167/jov.22.14.4054.

- Manning TS, Pillow JW, Rokers B, Cooper EA. Contrast-dependent speed biases are distance-dependent too. In Prep.

Optimal neural codes for binocular disparity

Binocular disparity, the difference between where the same point in space falls on the two eyes, is a useful visual cue for creating a vivid percept of the world in 3D. How does the brain encode this cue to maximize its usefulness for vision? In this collaborative project with Dr. Emma Alexander, Dr. Gregory DeAngelis, and Dr. Xin Huang, we use a combination of image statistics and information-theoretic analysis of neuronal responses to disparity in different brain areas to answer this question.

In short, there is a balancing act in the brain between: 1) the precision with which neurons in the brain can represent a physical variable, 2) the amount of metabolic resources a neural population requires, and 3) the loss function used to shape the population's selectivity (e.g. should the brain maximize sensory signal fidelity or discriminability?). We believe that there is a fundamental change in the loss functions guiding sensory encoding as one ascends the roughly hierarchical network of the primate visual system.

Published reports

- Manning TS, Alexander E, Cumming BG, DeAngelis GC, Huang X, Cooper EA. (2024) Transformations of sensory information in the brain suggest changing criteria for optimality. PLoS Computational Biology. doi: 10.1371/journal.pcbi.1011783.

- Manning TS, Alexander E, DeAngelis GC, Huang X, Cooper EA. (2021) Role of MT Disparity Tuning Biases in Figure-Ground Segregation. Poster presented at Society for Neuroscience, Chicago, IL.

Code repositories

- Analysis [Code]

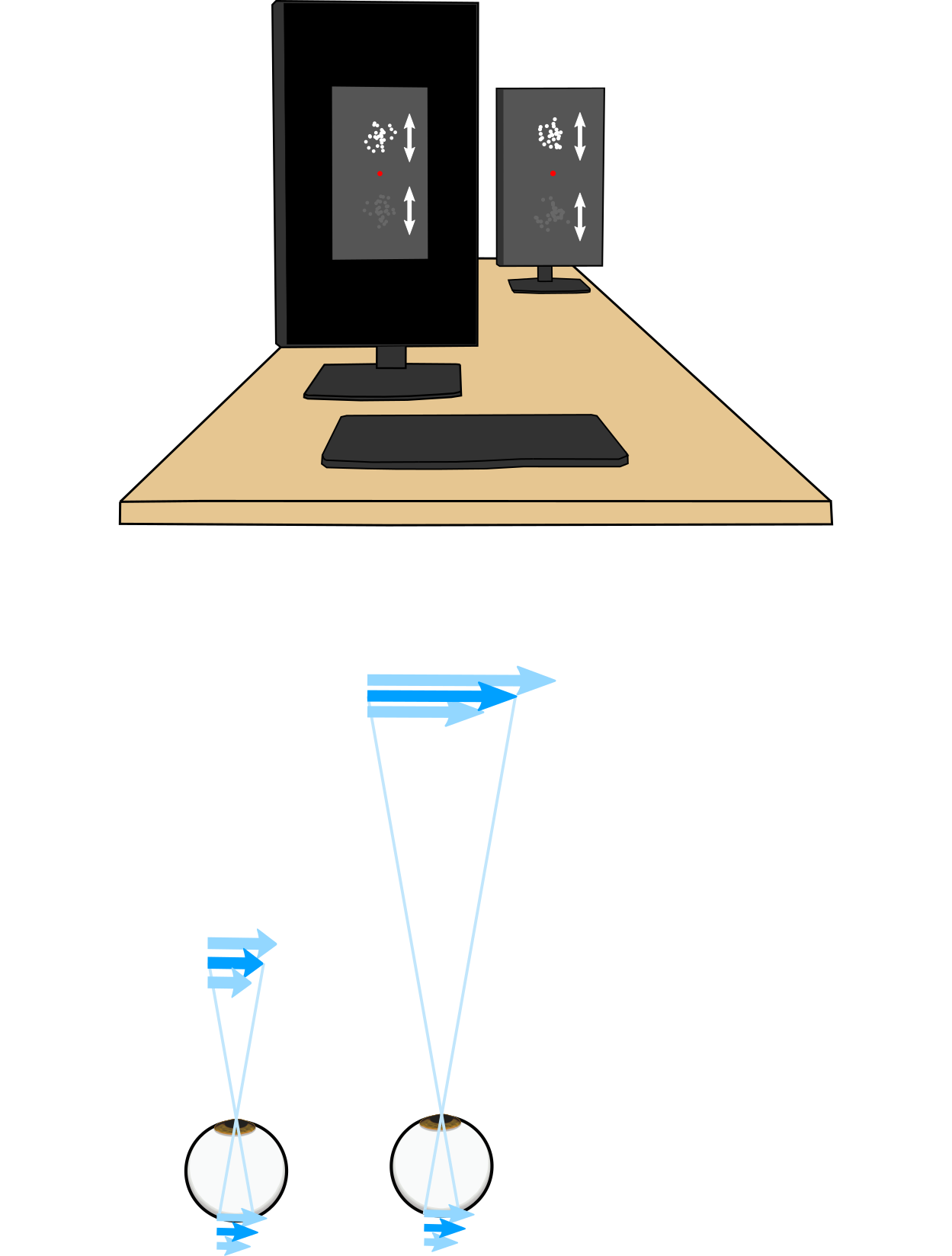

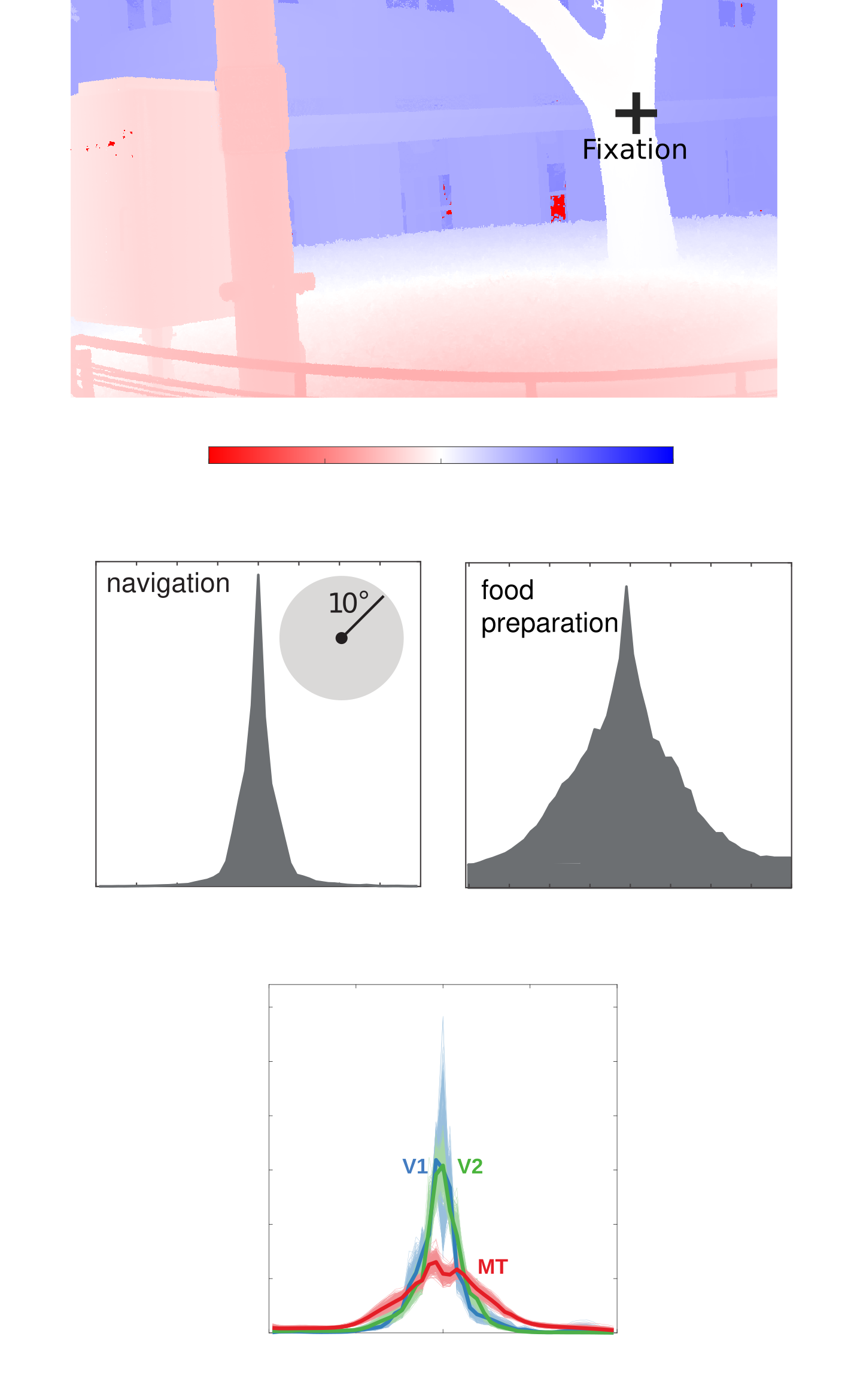

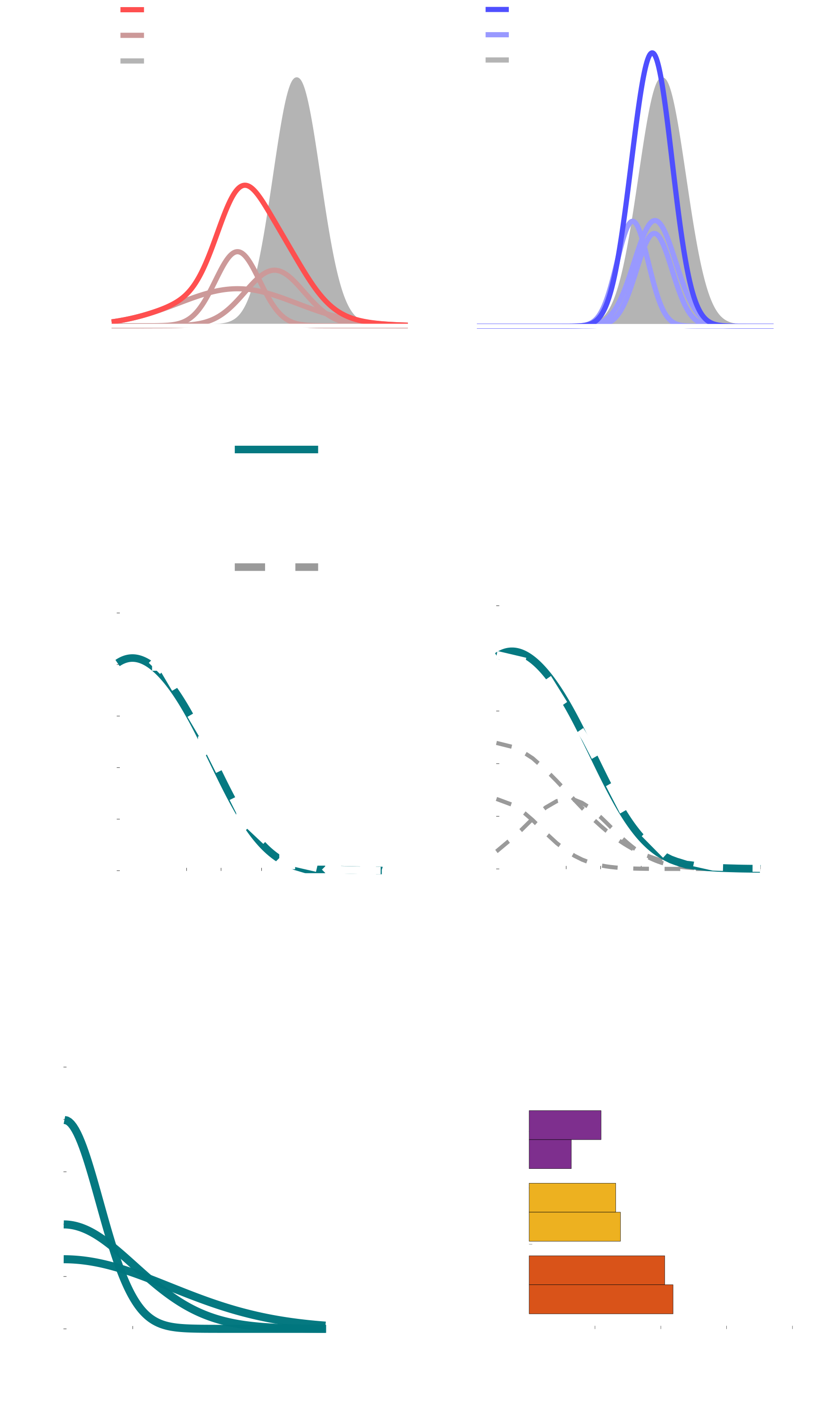

Improved methods for estimating perceptual priors

The goal of psychophysics is to define a lawful relationship between externally quantifiable aspects of the world (like luminance, distance, mass, etc.) and our perception of the world based on noisy measurements from our biological sensors. This problem has direct analogs in signal detection, computer vision, and related fields, so human psychophysics research has borrowed many of the same computational methods to model the brain within ideal observer frameworks. One aspect of perception that remains less well defined is the set of assumptions the brain makes while interpreting these sensory inputs. These assumptions, commonly called perceptual priors, are thought to underlie the systematic biases that people exhibit when making judgments about things like the angles of contours, the speed of objects, or their own self-motion.

Perceptual priors are often assumed to reflect the brain learning statistical regularities in the environment (e.g. the distribution of contour orientations or object speeds). It remains an open question how well our priors capture these statistics (as measured by head-mounted cameras, etc.) and how much these priors vary between individuals. One obstacle to resolving this question is the limitations of the mathematical tools used to estimate priors. Researchers have either derived priors nonparametrically or defined them with parametric forms such as Gaussian or piecewise linear functions. This project had two main goals: 1) to define the accuracy and precision with which we estimate priors (so we can assess how much they vary between people and how well they match stimulus statistics) and 2) to adapt existing statistical methods from other fields for use in perceptual science, so that we are less constrained in the range of priors we can faithfully estimate.

Published reports

- Manning TS, Naecker BN, McLean IR, Rokers B, Pillow JW, Cooper EA. (2022) A general framework for inferring Bayesian ideal observer models from psychophysical data. eNeuro. doi: 10.1523/ENEURO.0144-22.2022.

- Manning TS, McLean IR, Naecker BN, Pillow JW, Rokers B, Cooper EA. (2021) Estimating perceptual priors with finite experiments. Journal of Vision 21 (9), 2215. doi: 10.1167/jov.21.9.2215.

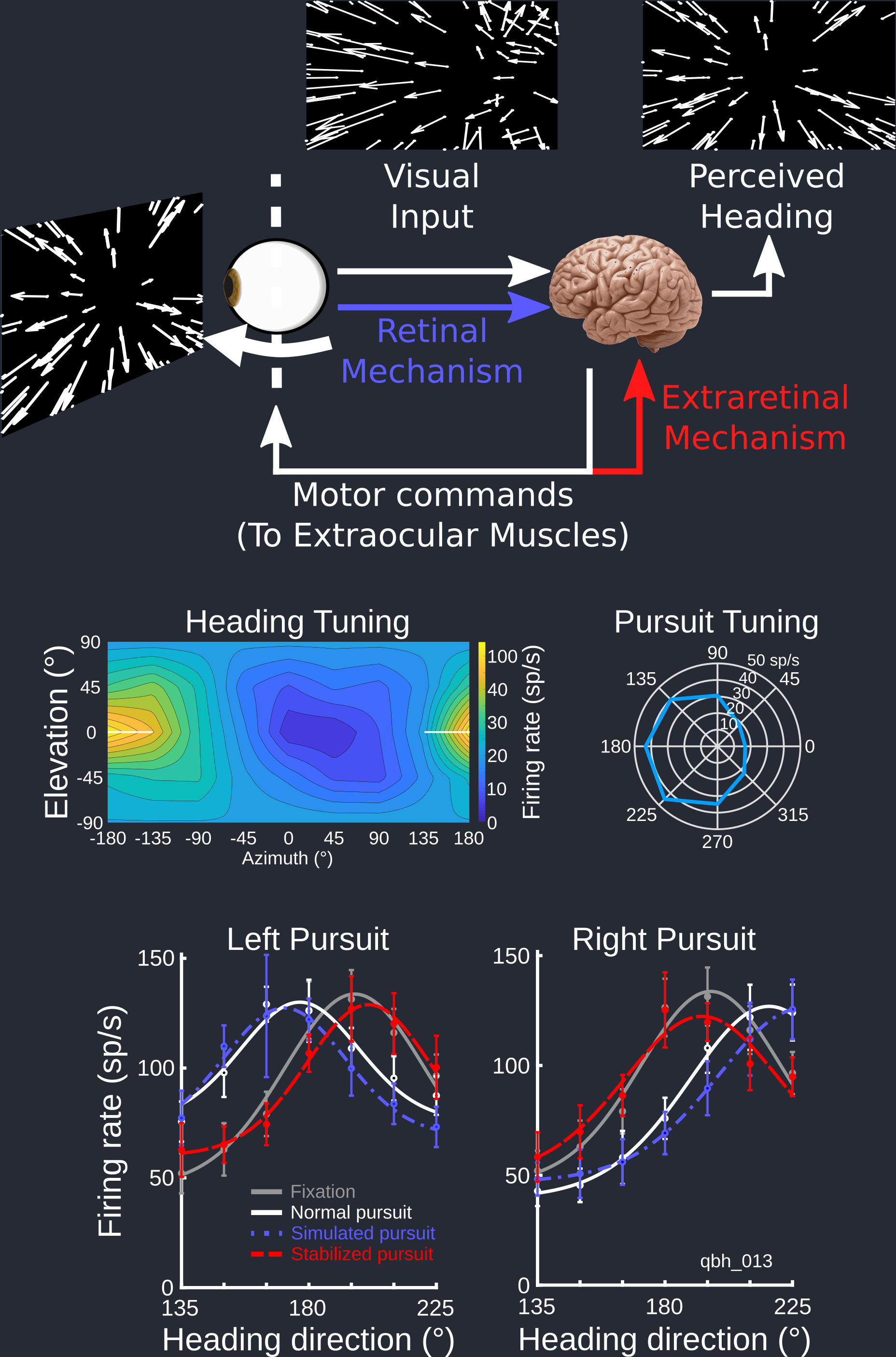

Extracting heading from retinal images during eye movements

The primate visual system has evolved to be highly sensitive to optic flow cues (complex patterns of motion that arise from an observer moving through the world) to create a vivid percept of self-motion. At the same time, we also have highly motile eyes that constantly select new aspects of the visual scene on which to focus the high-resolution fovea. These eye movements produce additional motion on the retina that distorts the optic flow patterns arising from locomotion, potentially giving the visual system incorrect information about heading. Because we nevertheless manage just fine navigating the world, the brain must have a way to extract heading information from these distorted images.

This extraction process could use a forward model of the expected distortions from an impending eye movement (i.e. based on an extraretinal mechanism) or may simply extract heading-related components of the moving image based on differences in the motion patterns caused by eye movements and locomotion (i.e. based on a retinal mechanism). We investigated which of these two theories was better supported by recording from heading-sensitive neurons in the macaque monkey brain while the monkeys visually tracked targets that moved in front of an optic flow stimulus.
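
To make the distortion concrete, the sketch below evaluates the standard pinhole-camera equations for retinal flow produced by observer translation plus eye rotation. It is an illustration only, not the analysis used in the reports below: the unit focal length, the sign convention (a static point moves relative to the eye as -T - Ω × P), the function name, and all numeric values are assumptions.

```python
def retinal_flow(x, y, depth, translation, rotation):
    """Image velocity (u, v) at image point (x, y) for a static scene point.

    translation = (tx, ty, tz) is observer velocity; rotation = (wx, wy, wz)
    is eye angular velocity. Only the translational part depends on depth.
    """
    tx, ty, tz = translation
    wx, wy, wz = rotation
    u_trans = (-tx + x * tz) / depth
    v_trans = (-ty + y * tz) / depth
    u_rot = x * y * wx - (1 + x * x) * wy + y * wz
    v_rot = (1 + y * y) * wx - x * y * wy - x * wz
    return (u_trans + u_rot, v_trans + v_rot)


forward = (0.0, 0.0, 1.0)      # heading straight ahead
no_pursuit = (0.0, 0.0, 0.0)   # eye held still
pursuit = (0.0, 0.1, 0.0)      # eye rotating about the vertical axis

# Flow at the image point corresponding to the heading direction.
flow_fixed = retinal_flow(0.0, 0.0, 2.0, forward, no_pursuit)
flow_pursuit = retinal_flow(0.0, 0.0, 2.0, forward, pursuit)
print("eye still:", flow_fixed)
print("pursuit:  ", flow_pursuit)


def added_by_pursuit(depth):
    """Extra flow caused by the eye rotation at one off-axis image point."""
    with_rot = retinal_flow(0.3, 0.2, depth, forward, pursuit)
    without = retinal_flow(0.3, 0.2, depth, forward, no_pursuit)
    return (with_rot[0] - without[0], with_rot[1] - without[1])


print("added at depth 1:", added_by_pursuit(1.0))
print("added at depth 5:", added_by_pursuit(5.0))
```

With the eye still, flow vanishes at the heading direction; during the assumed pursuit it does not, so the point of zero flow no longer marks heading. The rotation-induced component is the same at both depths while the translational component is not, which is one kind of motion-pattern difference a purely retinal mechanism could in principle exploit.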

Published reports

- Manning TS, Britten KH. (2019) Retinal stabilization reveals limited influence of extraretinal signals on heading tuning in the medial superior temporal area. J Neurosci. 39 (41) 8064-8078. doi: 10.1523/JNEUROSCI.0388-19.2019.

- Manning TS. (2019) Neural Mechanisms of Smooth Pursuit Compensation in Heading Perception. University of California, Davis ProQuest Dissertations Publishing. 13882251.

Code repositories

- Analysis [Code]